424 lines

43 KiB

Plaintext

424 lines

43 KiB

Plaintext

{

|

||

"cells": [

|

||

{

|

||

"cell_type": "markdown",

|

||

"metadata": {},

|

||

"source": [

|

||

"\n",

|

||

"*Note: the above pictures are from the Internet*\n",

|

||

"\n",

|

||

"# 1. OCR Technical Background\n",

|

||

"## 1.1 Application scenarios of OCR technology\n",

|

||

"\n",

|

||

"* **<font color=red>What is OCR?</font>**\n",

|

||

"\n",

|

||

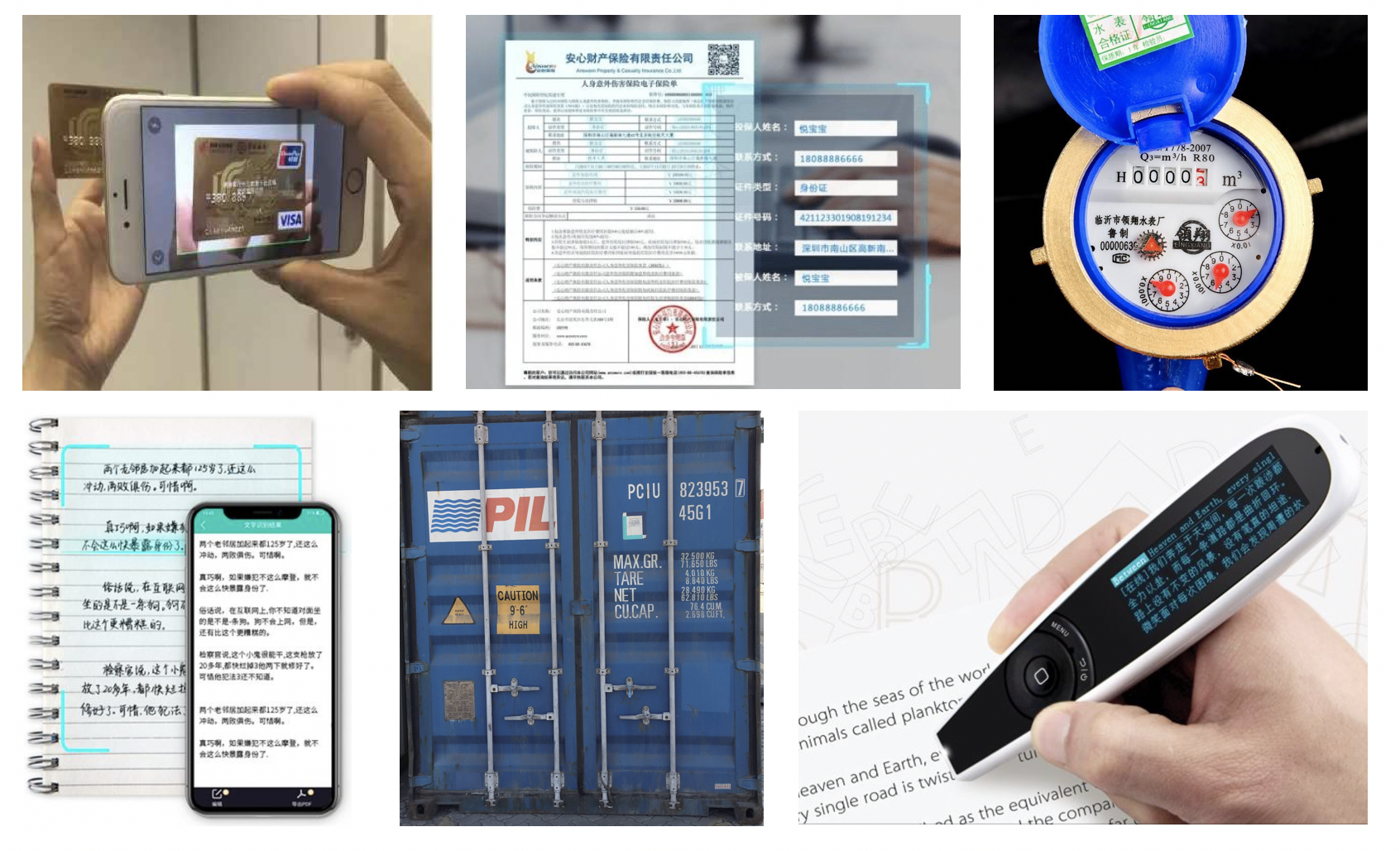

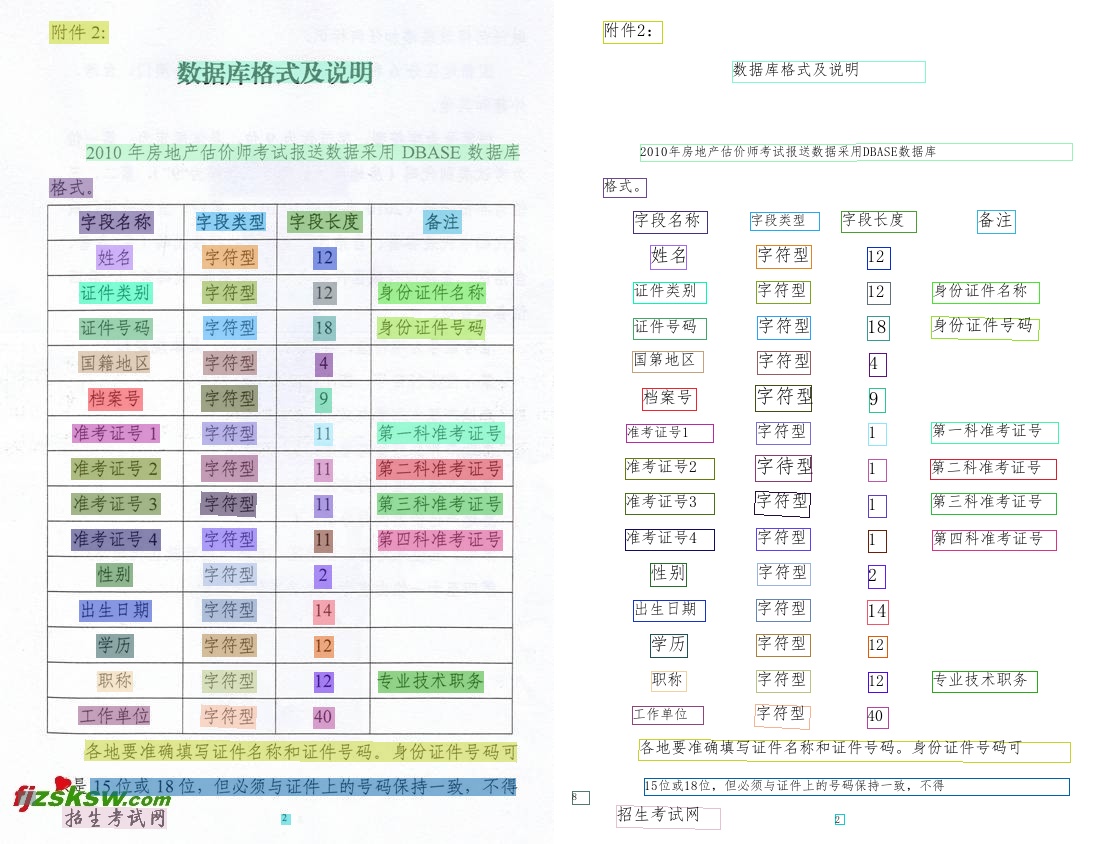

"OCR(Optical Character Recognition)is one of the important directions of computer vision. Traditionally defined OCR is generally oriented to scanned document objects. Now we often say OCR generally refers to scene Text Recognition (Scene Text Recognition, STR), which is mainly oriented to natural scenes, such as the text visible in various natural scenes such as the plaque shown in the figure below.\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure1 Document scene character recognition vs. natural scene character recognition</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"* **<font color=red>What are the application scenarios of OCR?</font>**\n",

|

||

"\n",

|

||

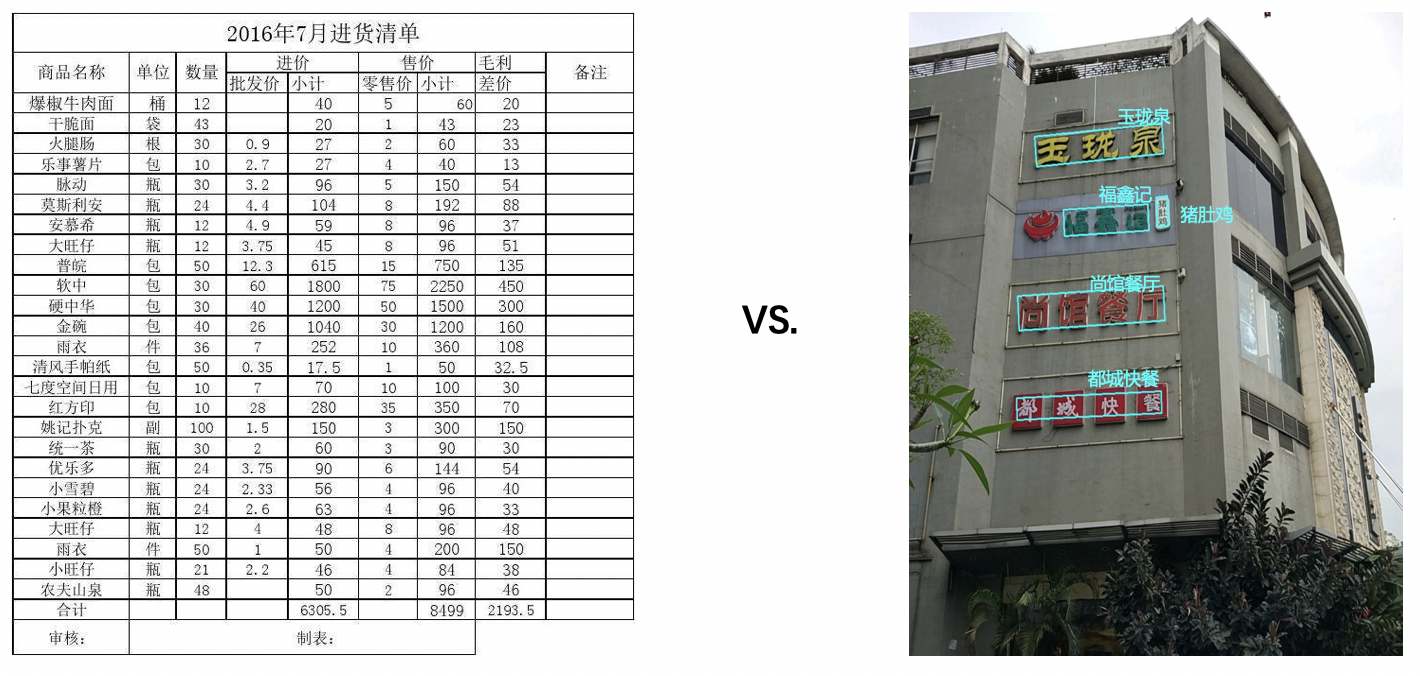

"\n",

|

||

"OCR technology has rich application scenarios. A typical scenario is vertical oriented structured Text Recognition widely used in daily life, such as license plate recognition, bank card information recognition, ID card information recognition, train ticket information recognition and so on. The common feature of these small vertical classes is that the format is fixed, so it is very suitable to use OCR technology for automation, which can greatly reduce labor cost and improve efficiency.\n",

|

||

"\n",

|

||

"This vertical class oriented structured Text Recognition is the scene where OCR is most widely used and the technology is relatively mature.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 2 Application scenario of OCR technology</center>\n",

|

||

"\n",

|

||

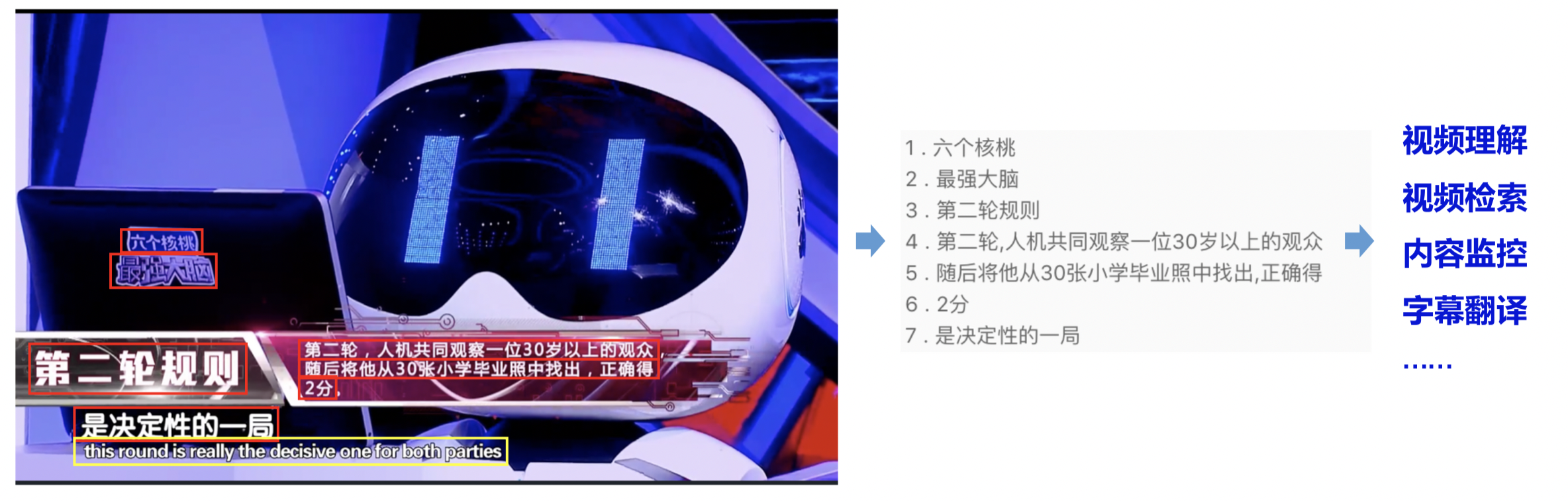

"In addition to vertical class oriented structured Text Recognition, general OCR technology is also widely used, and often combined with other technologies to complete multimodal tasks. For example, in video scenes, OCR technology is often used for automatic subtitle translation, content security monitoring, etc, or combined with visual features to complete video understanding, video search and other tasks.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 3 General OCR in multimodal scenario</center>\n",

|

||

"\n",

|

||

"## 1.2 1.2 OCR technical challenges\n",

|

||

"The technical difficulties of OCR can be divided into two aspects: algorithm layer and application layer.\n",

|

||

"\n",

|

||

"* **<font color=red>Algorithm Layer</font>**\n",

|

||

"\n",

|

||

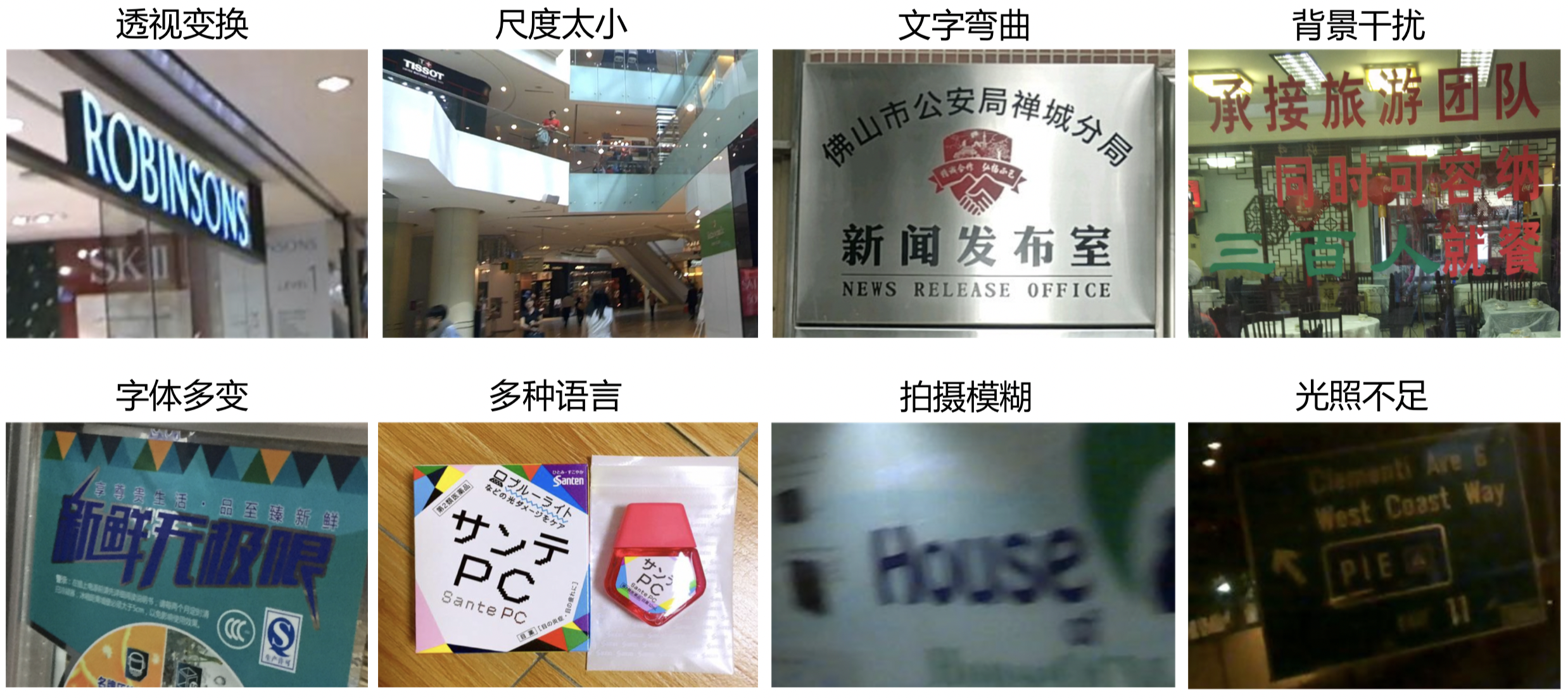

"The rich application scenarios of OCR determine that it will have many technical difficulties. Here are 8 common questions:\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 4 Technical difficulties of OCR algorithm layer</center>\n",

|

||

"\n",

|

||

"These problems have brought great technical challenges to text detection and Text Recognition. It can be seen that these challenges are mainly oriented to natural scenes. At present, the research in academic circles mainly focuses on natural scenes, and the commonly used datasets in OCR field are also natural scenes. There are many researches on these problems. Relatively speaking, recognition faces greater challenges than detection.\n",

|

||

"\n",

|

||

"* **<font color=red>Application Layer</font>**\n",

|

||

"\n",

|

||

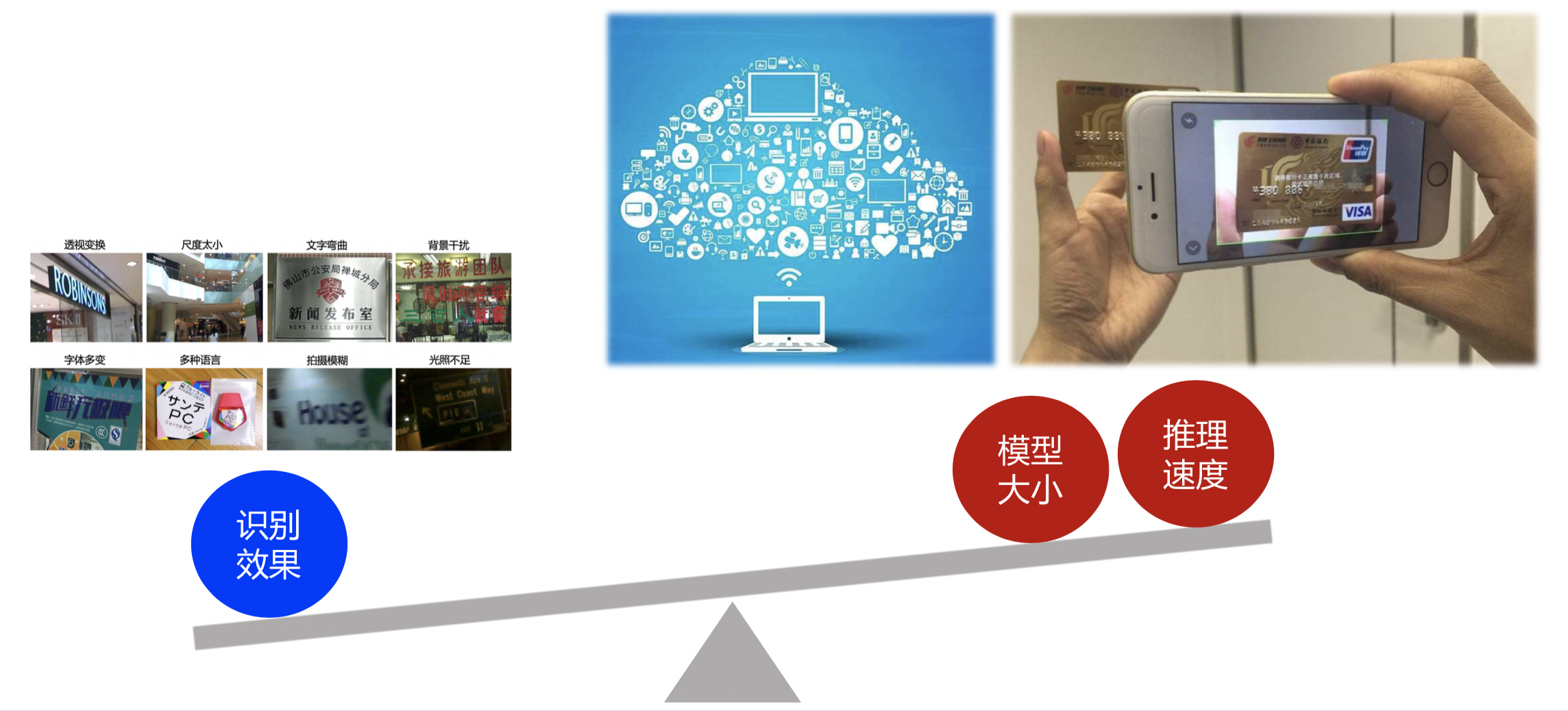

"In practical applications, especially in a wide range of general scenarios, in addition to the technical difficulties at the algorithm level such as affine transformation, scale problem, insufficient illumination and shooting blur summarized in the previous section, OCR technology also faces two landing difficulties:\n",

|

||

"\n",

|

||

"1. **Massive data requires OCR to process in real time** OCR applications often connect with massive data. We require or hope that the data can be processed in real time. It is a big challenge to achieve real-time model speed.\n",

|

||

"\n",

|

||

"2. **End side application requires OCR model to be light enough and recognition speed to be fast enough** OCR applications are often deployed on mobile terminals or embedded hardware. There are generally two modes for end-side OCR applications: upload to the server vs. end-side direct identification. Considering that the way of uploading to the server requires the network, low real-time performance, high pressure on the server when the amount of requests is too large, and the security of data transmission, we hope to complete OCR identification directly on the end side, The storage space and computing power on the end side are limited, so there are high requirements for the size and prediction speed of OCR model.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 5 technical difficulties of OCR application layer</center>\n",

|

||

"\n"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"metadata": {},

|

||

"source": [

|

||

"# 2.OCR Frontier Algorithm\n",

|

||

"Although OCR is a relatively specific task, it involves many technologies, including text detection, Text Recognition, end-to-end Text Recognition, document analysis and so on. Academic researches on various related technologies of OCR emerge one after another. The following will briefly introduce the related work of several key technologies in OCR task.\n",

|

||

"\n",

|

||

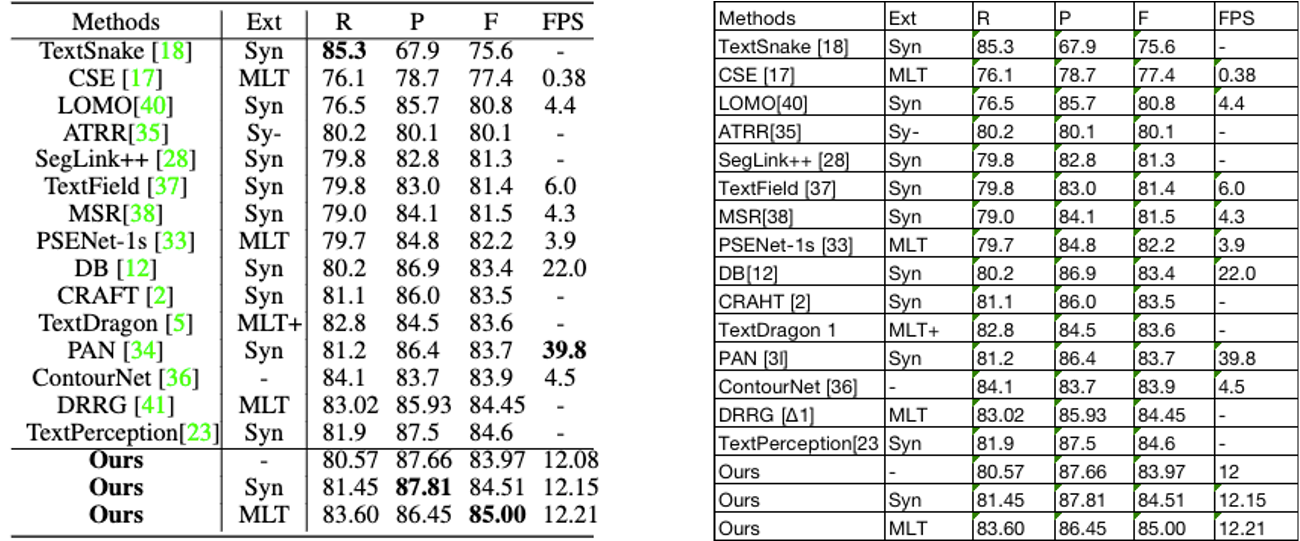

"## 2.1 Text Detection\n",

|

||

"\n",

|

||

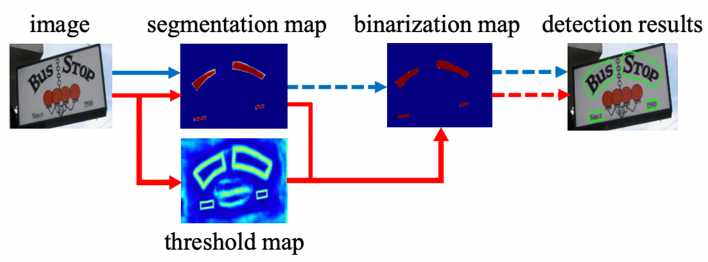

"The task of text detection is to locate the text area in the input image. In recent years, the academic research on text detection is very rich. One kind of methods regard text detection as a specific scene in target detection, and improve the adaptation based on the general target detection algorithm. For example, textboxes [1] adjusts the target frame to fit the text line with extreme aspect ratio based on the one-stage target detector SSD [2], Ctpn [3] is improved based on the fast RCNN [4] architecture. However, there are still some differences between text detection and target detection in target information and task itself. For example, text is generally large in length and width, often in a \"strip\", and text lines may be dense, bending text, etc. Therefore, many algorithms dedicated to text detection have been derived, such as East [5], psenet [6], dbnet [7], etc.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center><img src=\"https://ai-studio-static-online.cdn.bcebos.com/548b50212935402abb2e671c158c204737c2c64b9464442a8f65192c8a31b44d\" width=\"500\"></center>\n",

|

||

"<center>Figure 6 Example of text detection task</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

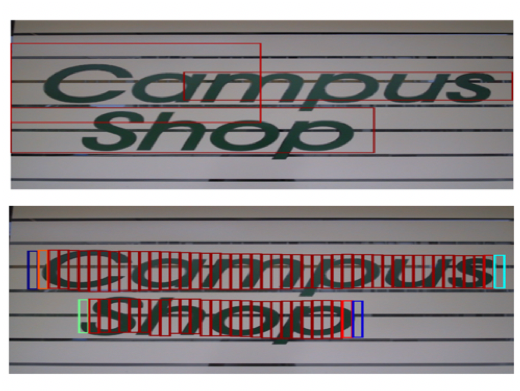

"At present, the more popular text detection algorithms can be roughly divided into two categories: **based on regression** and **based on segmentation**, and some algorithms combine the two. The regression based algorithm draws lessons from the general object detection algorithm. By setting the anchor regression detection box or directly doing pixel regression, this kind of method has better detection effect on regular shaped text, but relatively poor detection effect on irregular shaped text. For example, ctpn [3] has better detection effect on horizontal text, but poor detection effect on inclined and curved text, Seglink [8] is good for long text, but poor for text with sparse distribution; The segmentation based algorithm introduces mask RCNN [9], which can achieve a higher level in the detection effect of various scenes and texts of various shapes, but the disadvantage is that the post-processing is generally complex, so there are often speed problems and can not solve the detection problem of overlapping texts.\n",

|

||

"\n",

|

||

"<center><img src=\"https://ai-studio-static-online.cdn.bcebos.com/4f4ea65578384900909efff93d0b7386e86ece144d8c4677b7bc94b4f0337cfb\" width=\"800\"></center>\n",

|

||

"<center>Figure 7 Overview of text detection algorithm</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"||\n",

|

||

"|---|---|---|\n",

|

||

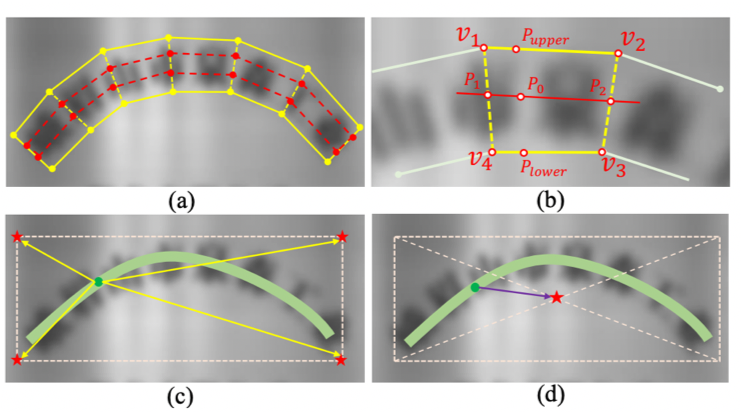

"<center>Figure 8 (left) ctpn [3] algorithm optimization based on regression anchor (middle) DB [7] algorithm optimization based on segmentation post-processing (right) SAST [10] algorithm based on regression + segmentation</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"The technologies related to text detection will be explained and practiced in detail in Chapter 2.\n",

|

||

"\n",

|

||

"## 2.2 Text Recognition\n",

|

||

"\n",

|

||

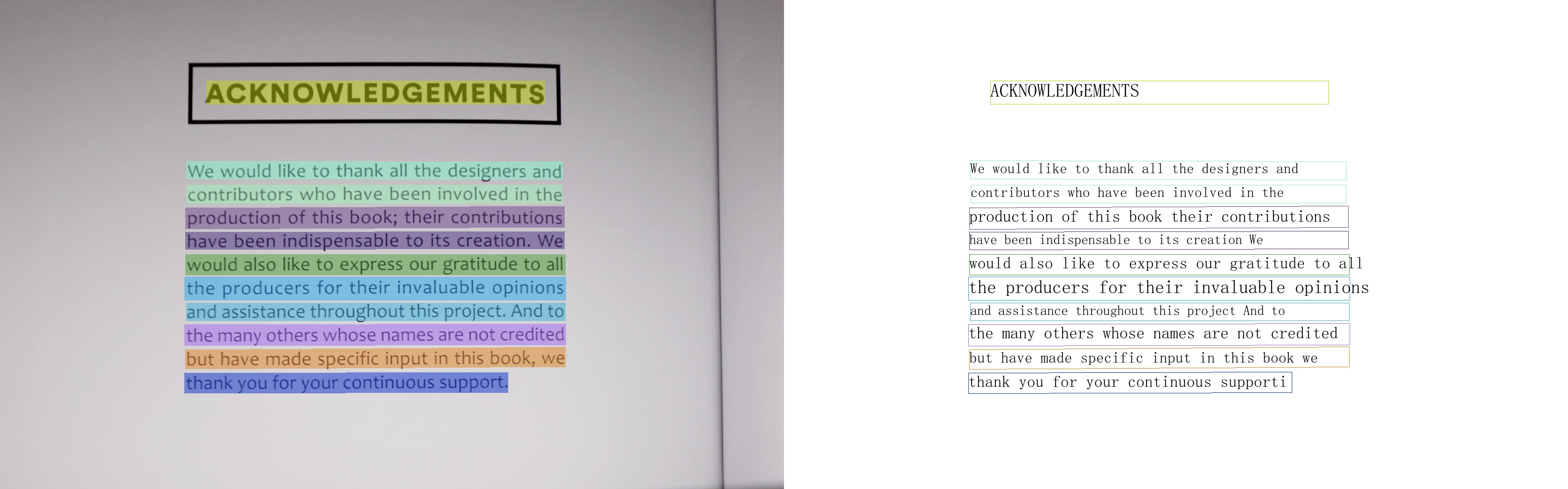

"The task of Text recognition is to recognize the text content in the image. Generally, input the image text area cut from the text box obtained by text detection. Text Recognition can generally be divided into **regular text recognition** and **irregular text recognition** according to the shape of the text to be recognized. Regular text mainly refers to printed font, scanned text, etc., and the text is roughly in the horizontal line position; Irregular text is often not in the horizontal position, and there are problems such as bending, occlusion, blur and so on. Irregular text scene is very challenging, and it is also the main research direction in the field of text tecognition.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 9 (left) regular text vs. (right) irregular text</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

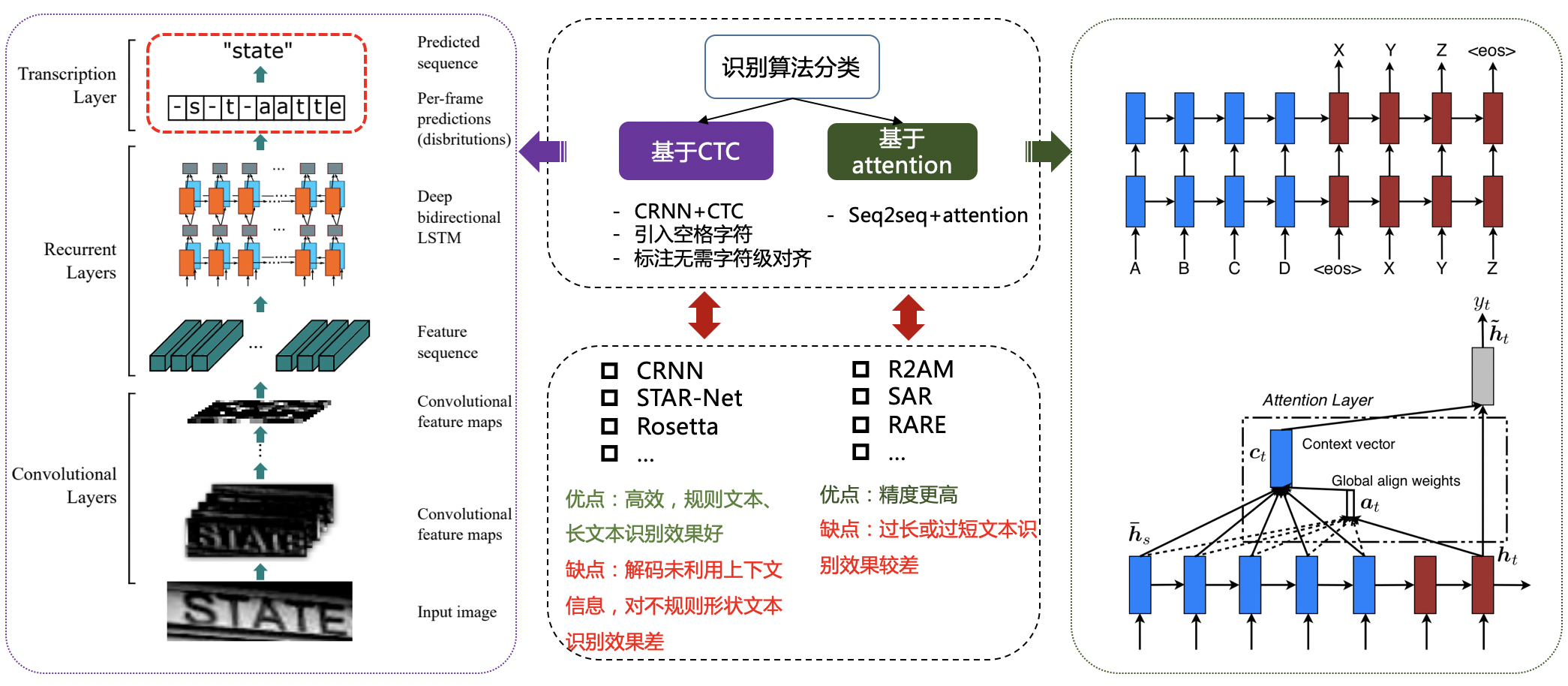

"According to different decoding methods, the algorithms of regular Text Recognition can be roughly divided into two types: CTC based and sequence2sequence based. The processing methods of transforming the sequence features learned by the network into the final recognition results are different. The CTC based algorithm is represented by the classical crnn [11].\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 10 CTC based recognition algorithm vs. attention based recognition algorithm</center>\n",

|

||

"\n",

|

||

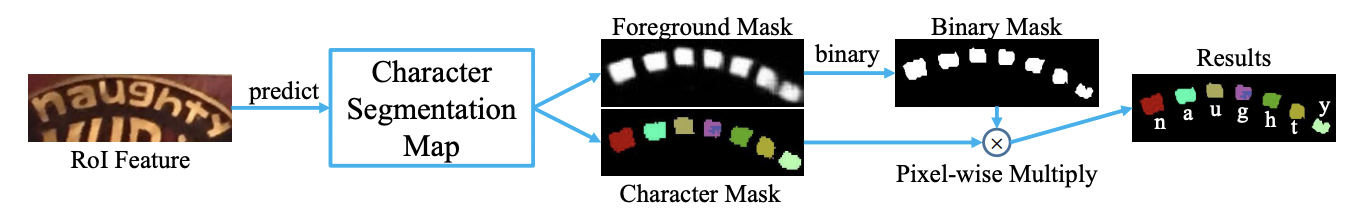

"Irregular Text Recognition algorithms are more abundant than others. For example, star net [12] and other methods correct irregular text into regular rectangles by adding TPS and other correction modules; Rare [13] and other attention based methods have enhanced the attention to the correlation between various parts of the sequence; The segmentation based method takes each character of the text line as an independent individual, which is easier to recognize the segmented single character than the recognition after correcting the whole text line; In addition, with the rapid development of transfomer [14] and its effectiveness verification in various tasks in recent years, a number of Text Recognition algorithms based on transformer have emerged. These methods use transformer structure to solve the limitations of CNN in long dependency modeling, and have achieved good results.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure. 11 recognition algorithm based on character segmentation [15]</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"The technologies related to Text Recognition will be explained and practiced in detail in Chapter 3.\n",

|

||

"\n",

|

||

"## 2.3 Document Structure Identification\n",

|

||

"\n",

|

||

"OCR technology in the traditional sense can meet the requirements of text detection and recognition, but in practical application scenarios, the final information to be obtained is often structured information, such as ID card and invoice information formatting and extraction, structured identification of forms, etc., mostly in express document extraction, contract content comparison, financial factoring sheet information comparison It is applied in scenarios such as document identification in logistics industry. OCR result + post-processing is a common structured scheme, but the process is often complex, and the post-processing needs fine design and poor generalization. With the gradual maturity of OCR technology and the increasing demand for structured information extraction, various technologies on intelligent document analysis, such as layout analysis, table recognition and key information extraction, have attracted more and more attention and research.\n",

|

||

"\n",

|

||

"* **Layout Analysis**\n",

|

||

"\n",

|

||

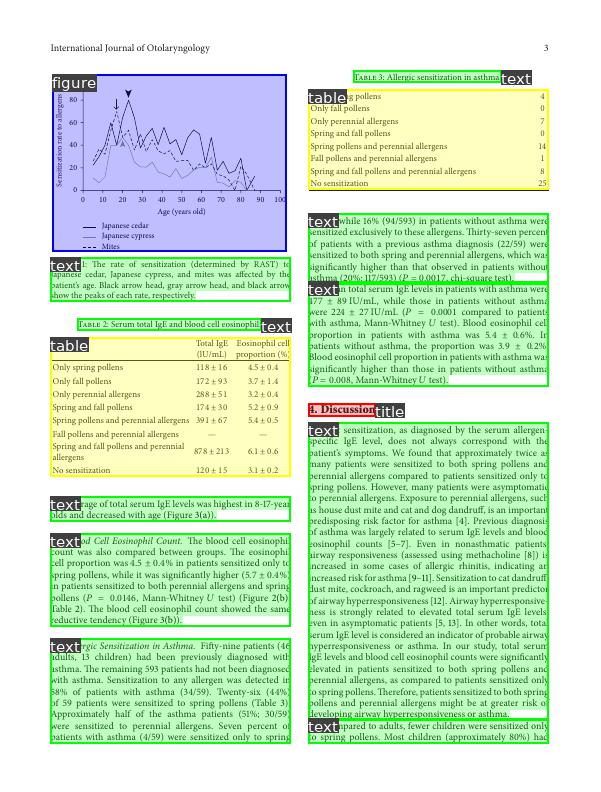

"Layout analysis is mainly used to classify the content of document images. Generally, the categories can be divided into plain text, title, table, picture, etc. Existing methods generally detect or segment different plates in the document as different targets. For example, Soto Carlos [16] improves the region detection performance by combining the context information and using the inherent location information of the document content on the basis of the target detection algorithm fast r-cnn; Sarkar mausoom [17] and others proposed a priori based segmentation mechanism to train the document segmentation model on very high-resolution images, which solves the problem that different structures of dense regions cannot be distinguished and then merged due to excessive reduction of the original image.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 12 Schematic Diagram of Layout Analysis Task</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

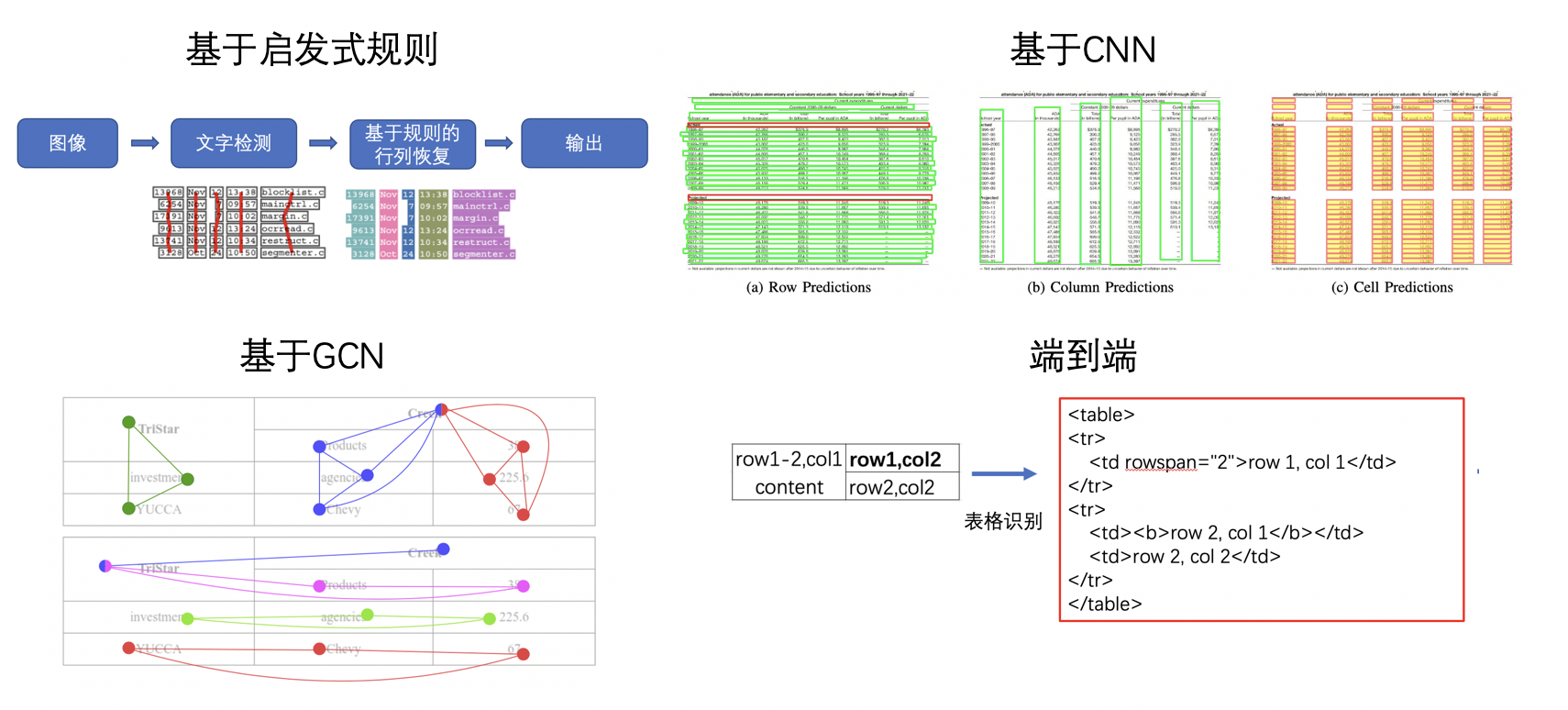

"* **Table Recognition**\n",

|

||

"\n",

|

||

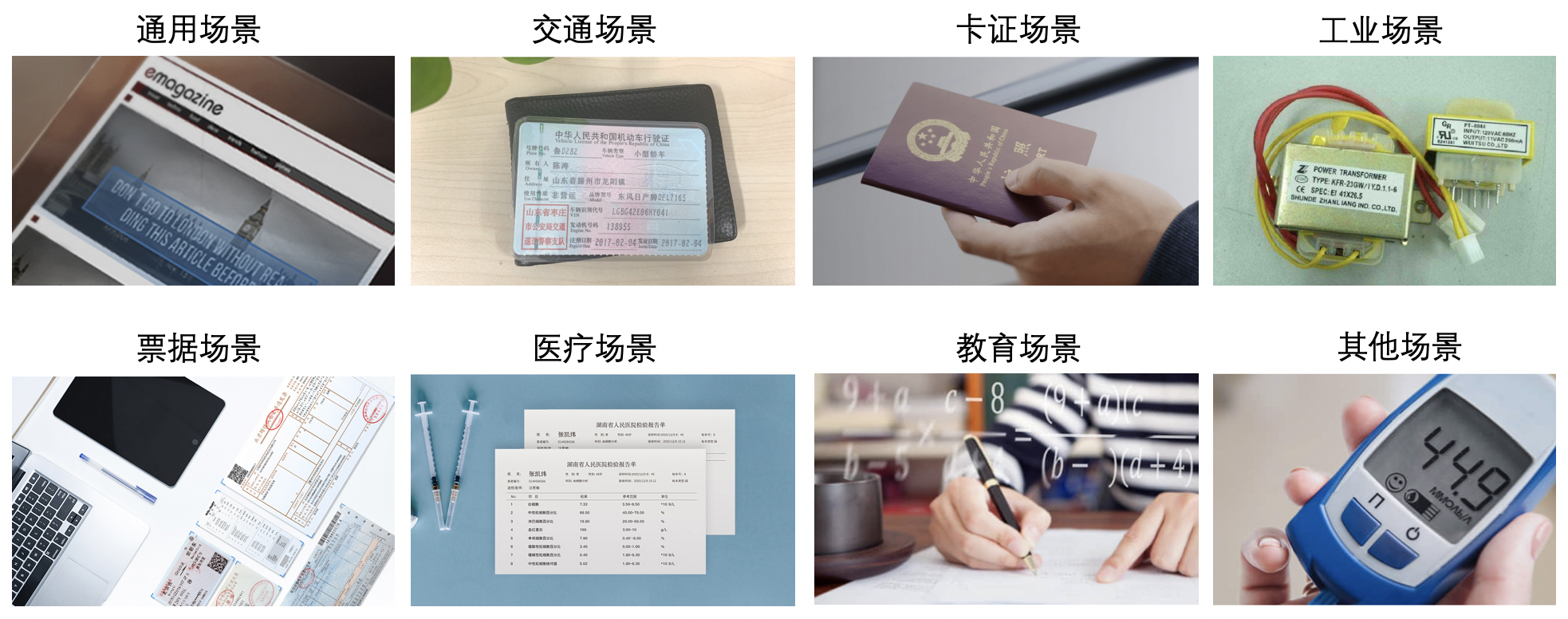

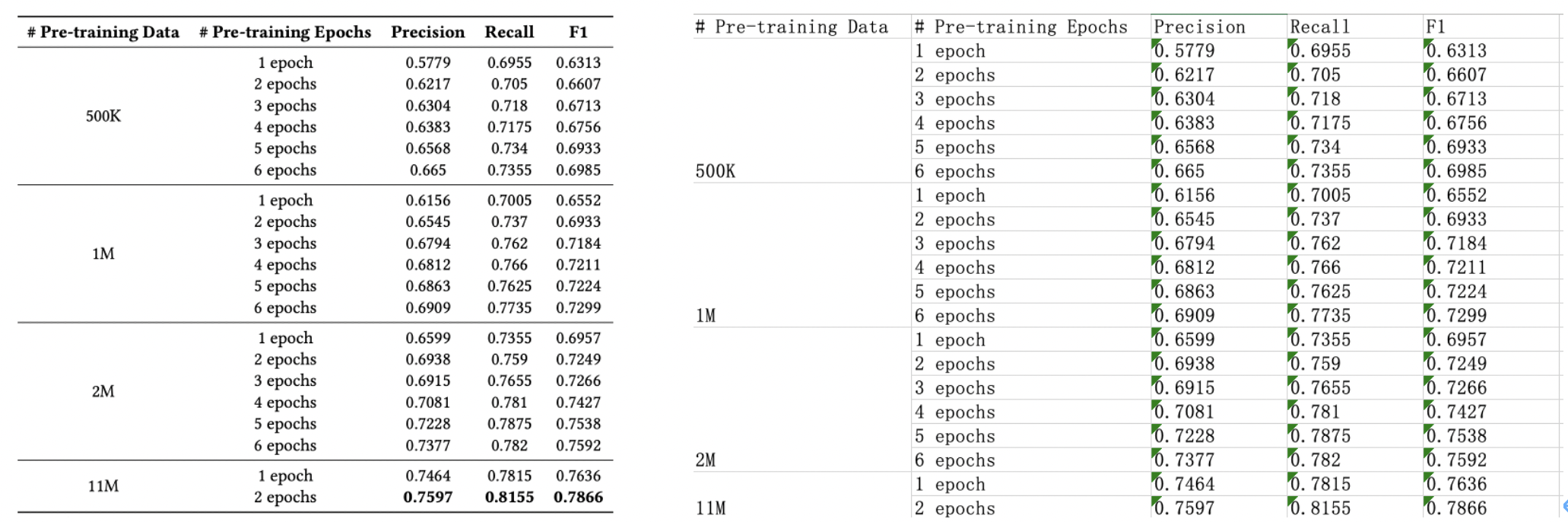

"The task of table recognition is to recognize and convert the table information in the document into excel file. The types and styles of tables in text images are complex and diverse, such as different row and column merging, different content text types, etc. in addition, the style of documents and the lighting environment during shooting have brought great challenges to table recognition. These challenges make table recognition always a research difficulty in the field of document understanding.\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 13 Schematic diagram of table recognition task</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"There are many kinds of methods for table recognition. The early traditional algorithms based on heuristic rules, such as t-rect and other algorithms proposed by kieninger [18] and others, are generally processed through manual design rules and connected domain detection and analysis; In recent years, with the development of deep learning, some CNN based table structure recognition algorithms have begun to emerge, such as deep tabstr proposed by Siddiqui Shoaib Ahmed [19] and tabstruct net proposed by Raja Sachin [20]; In addition, with the rise of graph neural network, some researchers try to apply graph neural network to table structure recognition. Based on graph neural network, table recognition is regarded as a graph reconstruction problem, such as tgrnet proposed by Xue Wenyuan [21]; The end-to-end method directly uses the network to complete the HTML representation output of the table structure. Most end-to-end methods use the seq2seq method to complete the prediction of the table structure, such as some methods based on attention or transformer, such as tablemaster [22].\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Fig. 14 Schematic diagram of table recognition method</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"* **Key Information Extraction**\n",

|

||

"\n",

|

||

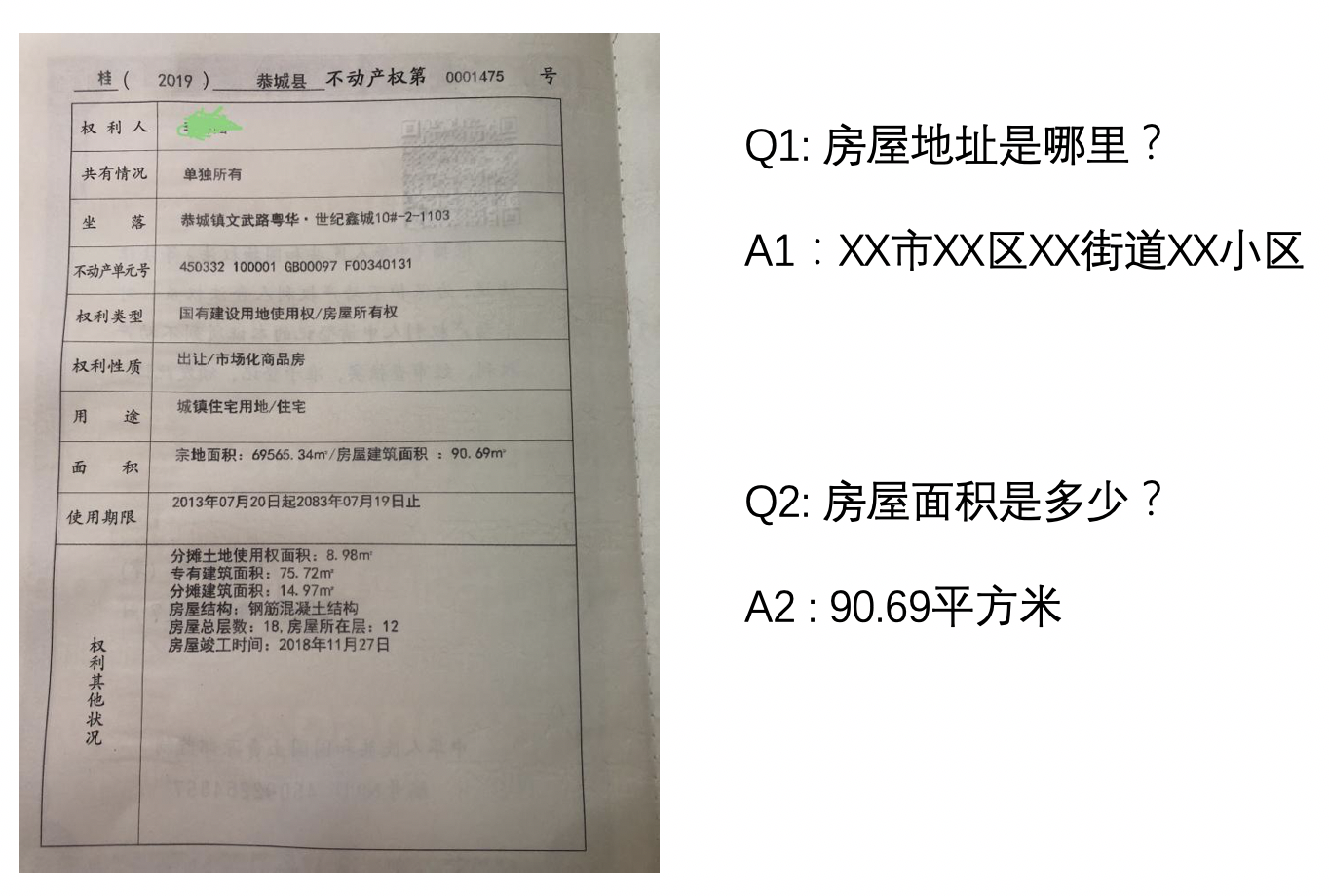

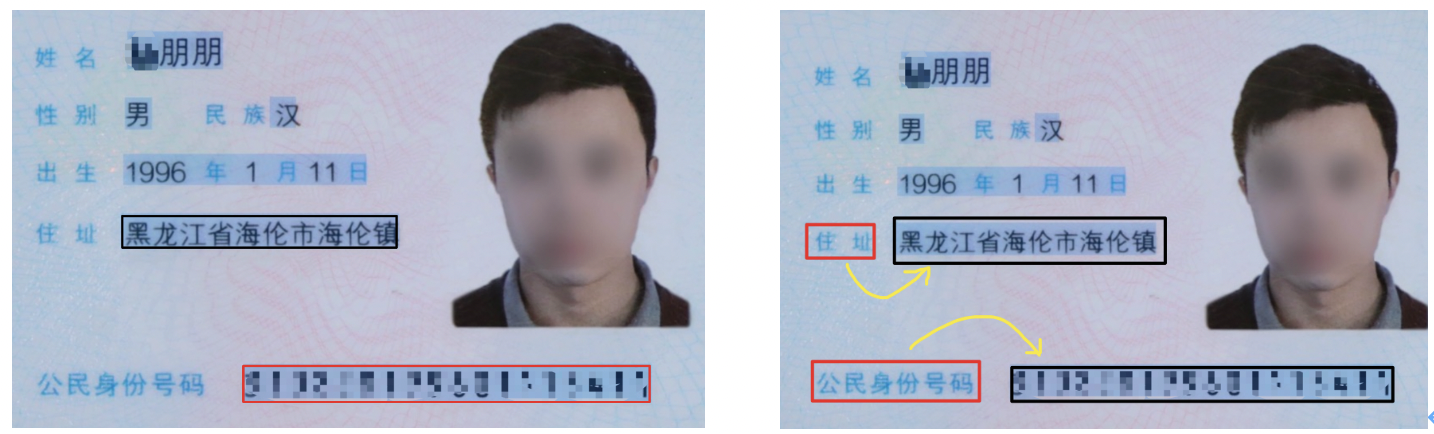

"Key information extraction (KIE) is an important task in document VQA. It mainly extracts the required key information from the image, such as the name and ID number information extracted from the ID card. The types of such information are often fixed under specific tasks, but they are different between different tasks.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 15 Schematic diagram of docvqa tasks</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"KIE is usually divided into two sub tasks:\n",

|

||

"\n",

|

||

"-Ser: semantic entity recognition, which classifies each detected text, such as name and ID card. See the black box and red box in the figure below.\n",

|

||

"-Re: relation extraction, which classifies each detected text, such as dividing it into questions and answers. Then find the corresponding answer to each question. As shown in the figure below, the red box and black box represent the question and answer respectively, and the yellow line represents the corresponding relationship between the question and answer.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 16 Ser and re tasks</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"General KIE methods are based on named entity recognition (NER) [4], but these methods only use the text information in the image and lack the use of visual and structural information, so the accuracy is not high. On this basis, the methods in recent years have begun to integrate visual and structural information with text information. According to the principle adopted in the fusion of multimodal information, these methods can be divided into the following four types:\n",

|

||

"\n",

|

||

"-Grid based method\n",

|

||

"-Token based method\n",

|

||

"-GCN based method\n",

|

||

"-End to end based method\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"The related technologies of document analysis will be explained and practiced in detail in Chapter 6.\n",

|

||

"\n",

|

||

"## 2.4 Other related technologies\n",

|

||

"Three key technologies in the field of OCR are mainly introduced: text detection, Text Recognition and document structured recognition. More other cutting-edge technologies related to OCR are introduced, including end-to-end Text Recognition, image preprocessing technology in OCR, OCR data synthesis, etc. Please refer to Chapter 7 and Chapter 8 of the tutorial.\n"

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"metadata": {},

|

||

"source": [

|

||

"# 3.Industrial practice of OCR technology\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

">You are Xiao Wang. What should I do?\n",

|

||

"> 1. I won't, I can't, I quit 😭\n",

|

||

"> 2. Suggest the boss find an outsourcing company or a commercialization scheme. Anyway, it will cost the boss's money 😊\n",

|

||

"> 3. Find similar projects online and program for GitHub 😏\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"OCR technology will eventually fall into industrial practice. Although there are many academic studies on OCR technology, and the commercial application of OCR technology has been relatively mature compared with other AI technologies, there are still some difficulties and challenges in the actual industrial application. The following will be analyzed from the perspectives of technology and industrial practice.\n",

|

||

"\n",

|

||

"\n",

|

||

"## 3.1 Difficulties in industrial practice\n",

|

||

"In actual industrial practice, developers often need to rely on open source community resources to start or promote projects, and developers often face three major problems when using open source models:\n",

|

||

"\n",

|

||

"<center>Figure 17 three problems in OCR technology industry practice</center>\n",

|

||

"\n",

|

||

"**1. Not found or selected**\n",

|

||

"\n",

|

||

"The open source community is rich in resources, but the information asymmetry leads to developers' inability to effectively solve the pain point problem. On the one hand, the resources of the open source community are too rich, and developers can't quickly find projects matching business requirements from a large number of code warehouses, that is, there is a problem of \"not finding\"; On the other hand, in algorithm selection, the indicators on the English public dataset can not provide a direct reference to the Chinese scenes often faced by developers. Algorithm by algorithm verification requires a lot of time and manpower, and it can not guarantee to select the most appropriate algorithm, that is, \"can not be selected\".\n",

|

||

"\n",

|

||

"**2.Not applicable to industrial scenarios**\n",

|

||

"\n",

|

||

"开源社区中的工作往往更多地偏向效果优化,如学术论文代码开源或复现,一般更侧重算法效果,平衡考虑模型大小和速度的工作相比就少很多,而模型大小和预测耗时在产业实践中是两项不容忽视的指标,其重要程度不亚于模型效果。无论是移动端和服务器端,待识别的图像数目往往非常多,都希望模型更小,精度更高,预测速度更快。GPU太贵,最好使用CPU跑起来更经济。在满足业务需求的前提下,模型越轻量占用的资源越少。\n",

|

||

"\n",

|

||

"**3. Difficult optimization and many training deployment problems**\n",

|

||

"\n",

|

||

"The open source community is rich in resources, but the information asymmetry leads to developers' inability to effectively solve the pain point problem. On the one hand, the resources of the open source community are too rich, and developers can't quickly find projects matching business requirements from a large number of code warehouses, that is, there is a problem of \"not finding\"; On the other hand, in algorithm selection, the indicators on the English public dataset can not provide a direct reference to the Chinese scenes often faced by developers. Algorithm by algorithm verification requires a lot of time and manpower, and it can not guarantee to select the most appropriate algorithm, that is, \"can not be selected\".\n",

|

||

"\n",

|

||

"## 3.2 产业级OCR开发套件PaddleOCR\n",

|

||

"\n",

|

||

"OCR industry practice needs a set of complete and whole process solutions to speed up R & D progress and save valuable R & D time. In other words, the ultra lightweight model and its whole process solution are just needed, especially for mobile terminals and embedded devices with limited computing power and storage space.\n",

|

||

"\n",

|

||

"In this context, the industrial OCR development kit [paddleocr]( https://github.com/PaddlePaddle/PaddleOCR )came into being.\n",

|

||

"\n",

|

||

"The construction idea of paddleocr starts from the user portrait and needs, relying on the core framework of the propeller, selects and replicates rich cutting-edge algorithms, develops PP characteristic models more suitable for industrial landing based on the reproduced algorithms, integrates training and promotion, and provides a variety of prediction deployment methods to meet the different demand scenarios of practical application.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 18 Panorama of PaddleOCR Development Kit</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

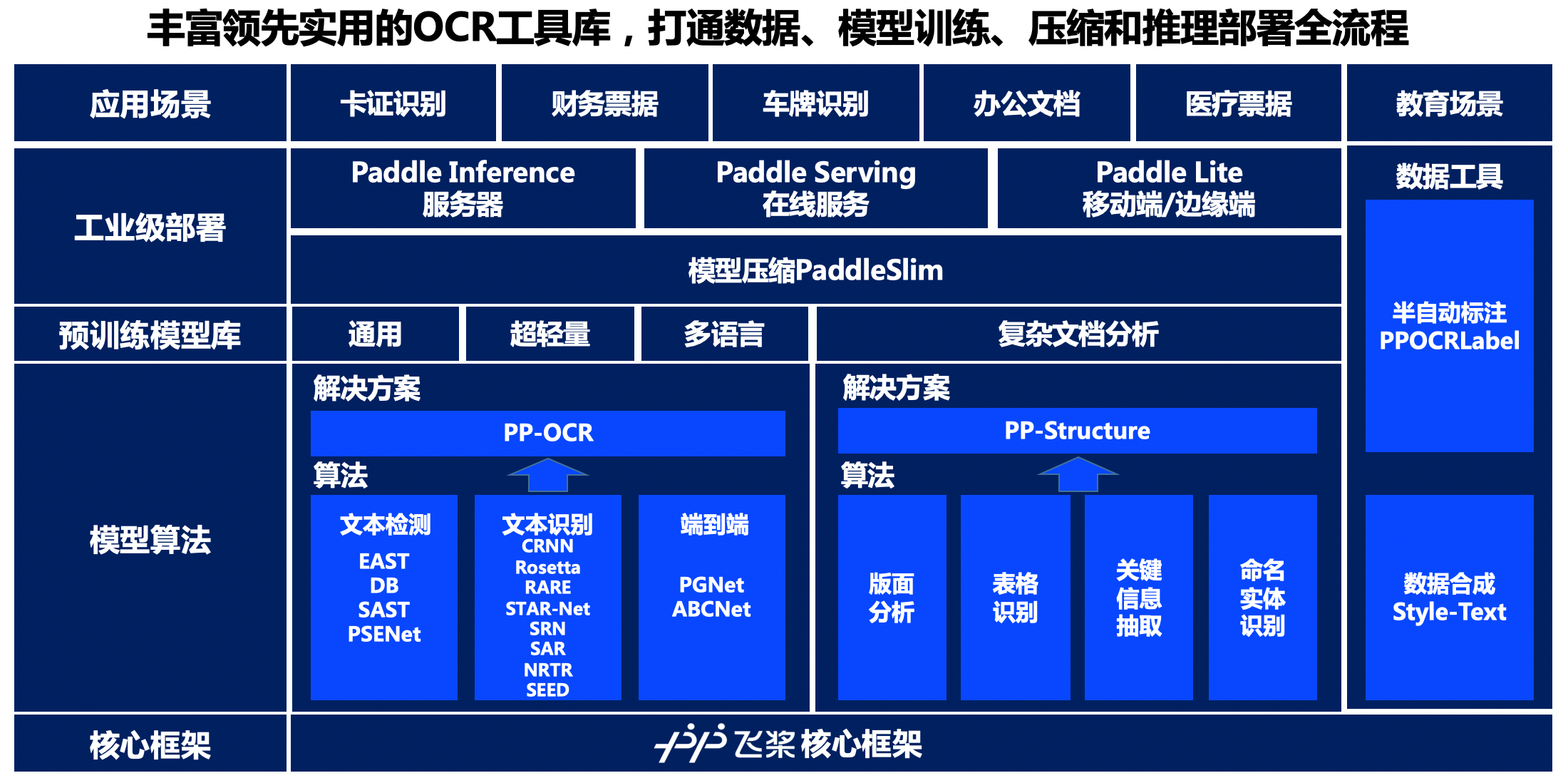

"As can be seen from the panorama, paddleocr relies on the core framework of the propeller, provides rich solutions at the levels of model algorithm, pre training model library and industrial deployment, and provides data synthesis and semi-automatic data annotation tools to meet the data production needs of developers.\n",

|

||

"\n",

|

||

"**At the model algorithm level**, paddleocr provides solutions for **text detection and recognition** and **document structural analysis**. In terms of text detection and recognition, paddleocr reproduces or opens source four text detection algorithms, eight Text Recognition algorithms and one end-to-end Text Recognition algorithm, and develops PP-OCR series general text detection and recognition solutions on this basis; In the aspect of document structure analysis, paddleocr provides algorithms such as layout analysis, table recognition, key information extraction and named entity recognition, and puts forward the PP structure document analysis solution. Rich selection algorithms can meet the needs of developers in different business scenarios. The unification of code framework also facilitates developers to optimize and compare the performance of different algorithms.\n",

|

||

"\n",

|

||

"**At the model algorithm level**, paddleocr provides solutions for **text detection and recognition** and **document structural analysis**. In terms of text detection and recognition, paddleocr reproduces or opens source four text detection algorithms, eight Text Recognition algorithms and one end-to-end Text Recognition algorithm, and develops PP-OCR series general text detection and recognition solutions on this basis; In the aspect of document structure analysis, paddleocr provides algorithms such as layout analysis, table recognition, key information extraction and named entity recognition, and puts forward the PP structure document analysis solution. Rich selection algorithms can meet the needs of developers in different business scenarios. The unification of code framework also facilitates developers to optimize and compare the performance of different algorithms.\n",

|

||

"\n",

|

||

"**At the industrial deployment level**, paddleocr provides a server-side prediction scheme based on paddle inference, a service-oriented deployment scheme based on paddle serving, and an end-side deployment scheme based on paddle Lite to meet the deployment requirements in different hardware environments. At the same time, it provides a model compression scheme based on paddleslim, which can further compress the model size. The above deployment methods have completed the whole process of training and promotion, so as to ensure that developers can deploy efficiently, stably and reliably.\n",

|

||

"\n",

|

||

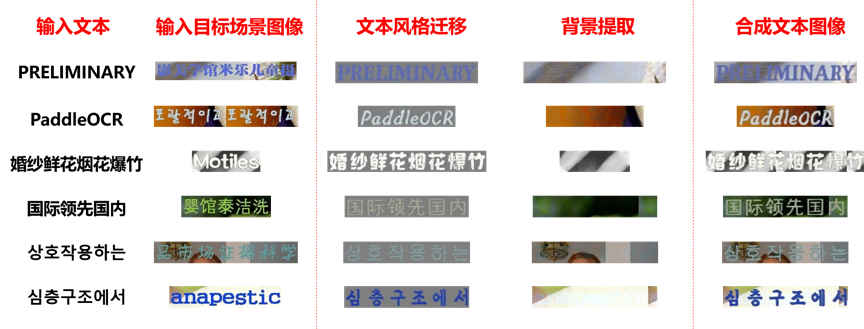

"**At the data tool level**, paddleocr provides a semi-automatic data annotation tool ppocrlabel and a data synthesis tool style text to help developers more conveniently train the data sets and annotation information required for production models. As the first open source semi-automatic OCR data annotation tool in the industry, ppocrlabel has built-in PP-OCR model to realize the annotation mode of pre annotation + manual verification, which can greatly improve the annotation efficiency and save labor cost. The data synthesis tool style text mainly solves the problem that the real data of the actual scene is seriously insufficient, and the traditional synthesis algorithm can not synthesize the text style (font, color, spacing and background). Only a few target scene images are needed, and a large number of text images similar to the style of the target scene can be synthesized in batches.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 19 Schematic diagram of ppocrlabel</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 20 Example of style text synthesis effect</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"### 3.2.1 PP-OCR and PP-Structrue\n",

|

||

"\n",

|

||

"PP series characteristic model is a model that deeply optimizes each visual development kit of the propeller according to the needs of industrial practice, striving to balance speed and precision. PP series feature models in paddleocr include PP-OCR series models for text detection and recognition tasks and PP structure series models for document analysis.\n",

|

||

"\n",

|

||

"**(1) PP-OCR Chinese English Model**\n",

|

||

"\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 21 example of PP-OCR Chinese and English model recognition results</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

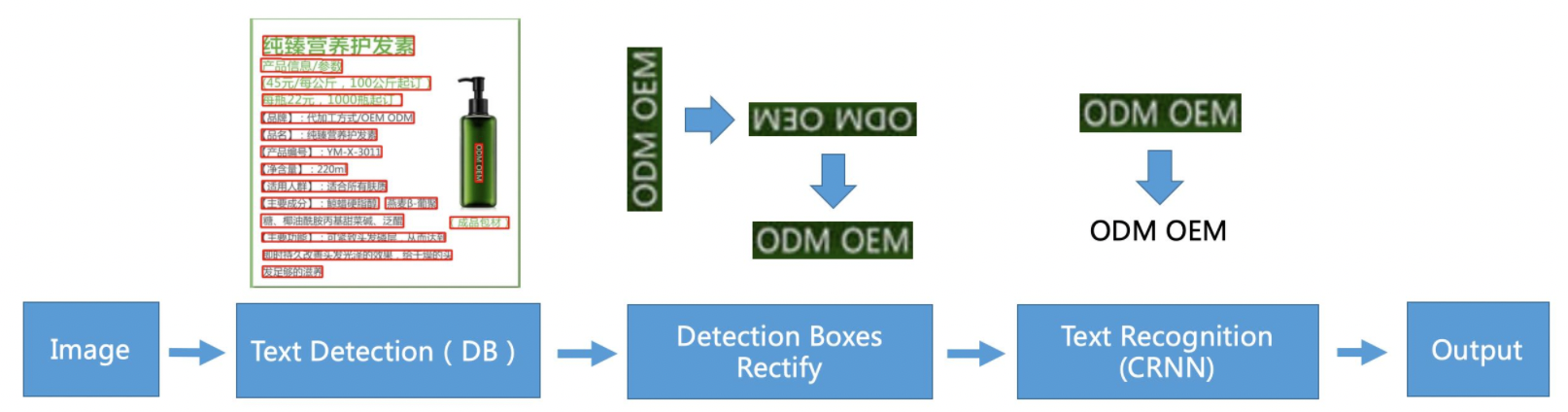

"The typical two-stage OCR algorithm used in PP-OCR Chinese and English model is the composition of detection model + recognition model. The specific algorithm framework is as follows:\n",

|

||

"\n",

|

||

"<center>Figure 22 pipeline diagram of PP-OCR system</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

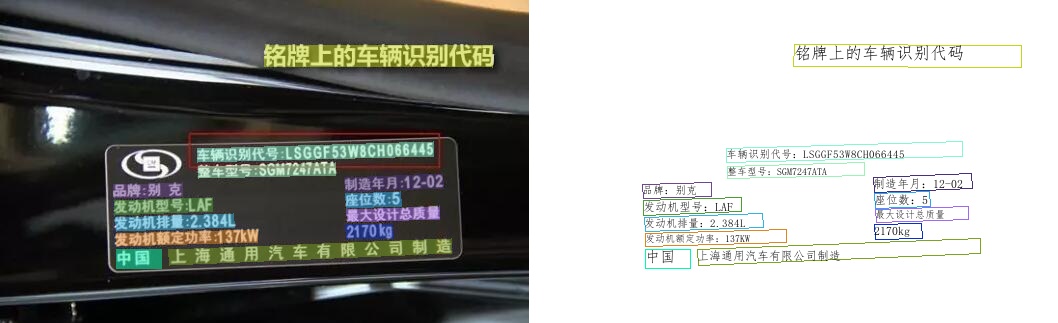

"It can be seen that in addition to input and output, PP-OCR core framework includes three modules: text detection module, detection box correction module and Text Recognition module.\n",

|

||

"-Text detection module: the core is based on [DB]( https://arxiv.org/abs/1911.08947 )The text detection model trained by the detection algorithm detects the text region in the image;\n",

|

||

"-Detection box correction module: input the detected text box into the detection box correction module. At this stage, the text box represented by four points is corrected into a rectangular box to facilitate subsequent Text Recognition. On the other hand, it will judge and correct the text direction. For example, if it is judged that the text line is inverted, it will become a positive, This function is realized by training a text direction classifier;\n",

|

||

"-Text Recognition module: finally, the Text Recognition module performs Text Recognition on the corrected detection box to obtain the text content in each text box. The classical Text Recognition algorithm [crnn] used in PP-OCR( https://arxiv.org/abs/1507.05717 )。\n",

|

||

"\n",

|

||

"Paddleocr successively launched PP-OCR [23] and PP-OCRv2 [24] models.\n",

|

||

"\n",

|

||

"PP-OCR model is divided into mobile version (lightweight version) and server version (general version). The mobile version model is mainly optimized based on the lightweight backbone network mobilenetv3. The size of the optimized model (detection model + text direction classification model + recognition model) is only 8.1m. The average prediction time of a single image on CPU is 350ms, and about 110ms on T4 GPU. After cutting and quantization, It can be further compressed to 3.5m with the same accuracy, which is convenient for end-to-side deployment. The test and prediction time on Xiaolong 855 is only 260ms. More PP-OCR evaluation data can be referred to[benchmark](https://github.com/PaddlePaddle/PaddleOCR/blob/release/2.2/doc/doc_ch/benchmark.md)。\n",

|

||

"\n",

|

||

"PP-OCRv2 maintains the overall framework of PP-OCR and mainly makes further strategy optimization in effect. The improvement includes three aspects:\n",

|

||

"- In terms of model effect, compared with PP-OCR mobile version, it has been improved by more than 7%;\n",

|

||

"- In terms of speed, compared with the PP-OCR server version, it has been improved by more than 220%;\n",

|

||

"- In terms of model size, the total size of 11.6m can be easily deployed on the server side and mobile side.\n",

|

||

"\n",

|

||

"The specific optimization strategies of PP-OCR and PP-OCRv2 will be explained in detail in Chapter 4.\n",

|

||

"In addition to Chinese and English models, paddleocr also trains and opens source English digital models and multilingual recognition models based on different data sets. The above models are ultra lightweight and suitable for different language scenarios.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 23 schematic diagram of recognition effect of English digital model and multilingual model of PP-OCR</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"**(2)PP-Structure Document analysis Model**\n",

|

||

"\n",

|

||

"PP-Structure Support layout analysis 、 table recognition 、 DocVQA Three sub tasks.\n",

|

||

"\n",

|

||

"The core function points of PP structure are as follows:\n",

|

||

"- It supports layout analysis of documents in the form of pictures, and can be divided into five areas: text, title, table, picture and list (used in combination with layout parser)\n",

|

||

"- Support text, title, picture and list area extraction as text fields (used in combination with PP-OCR)\n",

|

||

"- Support structured analysis in the table area, and output the final result to excel file\n",

|

||

"- It supports Python WHL package and command line, which is simple and easy to use\n",

|

||

"- It supports two types of task customization training: layout analysis and table structure\n",

|

||

"- Support VQA tasks - Ser and re\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 24 schematic diagram of PP structure system (this figure only includes layout analysis + table identification)</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"The specific scheme of PP structure will be explained in detail in Chapter 6.\n",

|

||

"\n",

|

||

"### 3.2.2 Industrial Deployment Scheme\n",

|

||

"\n",

|

||

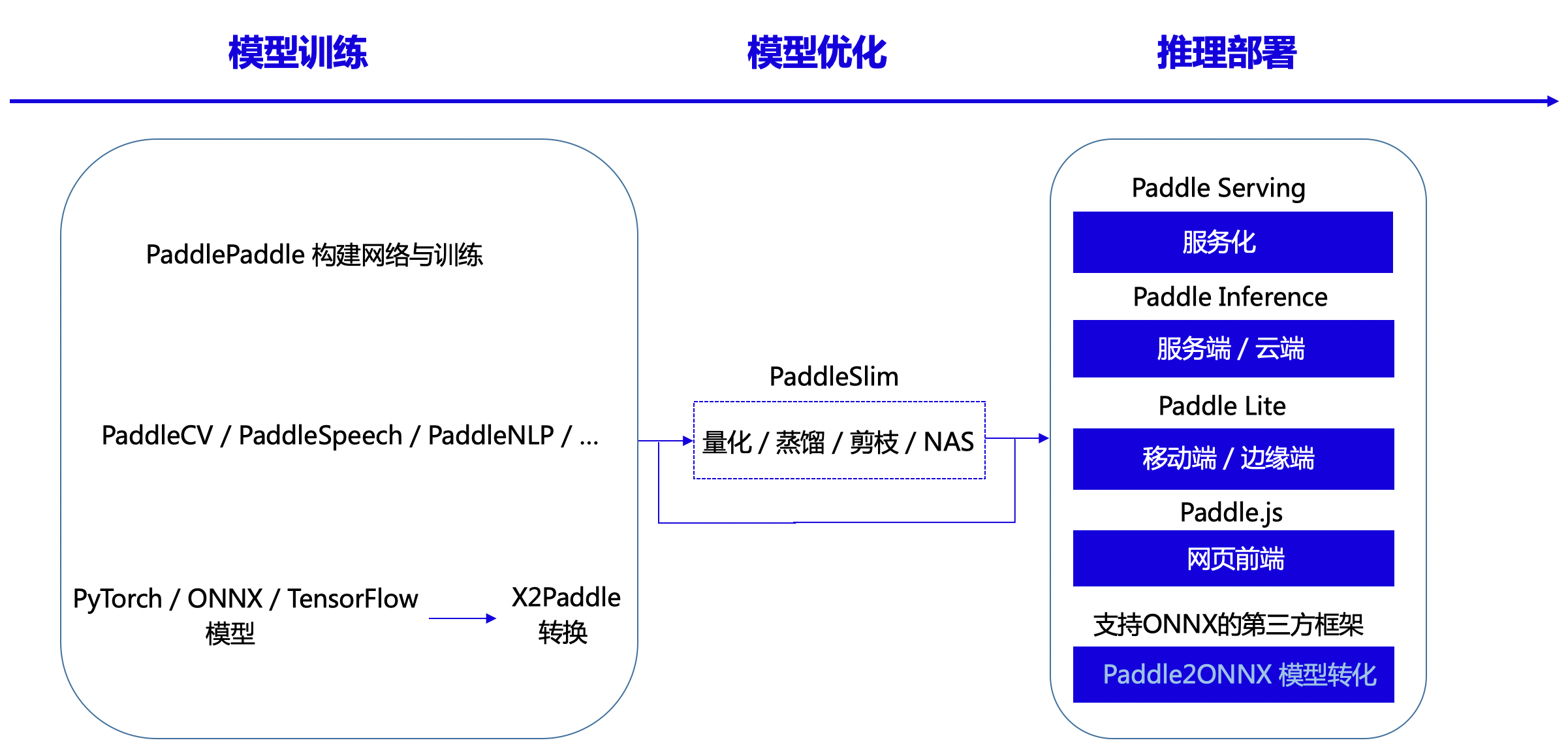

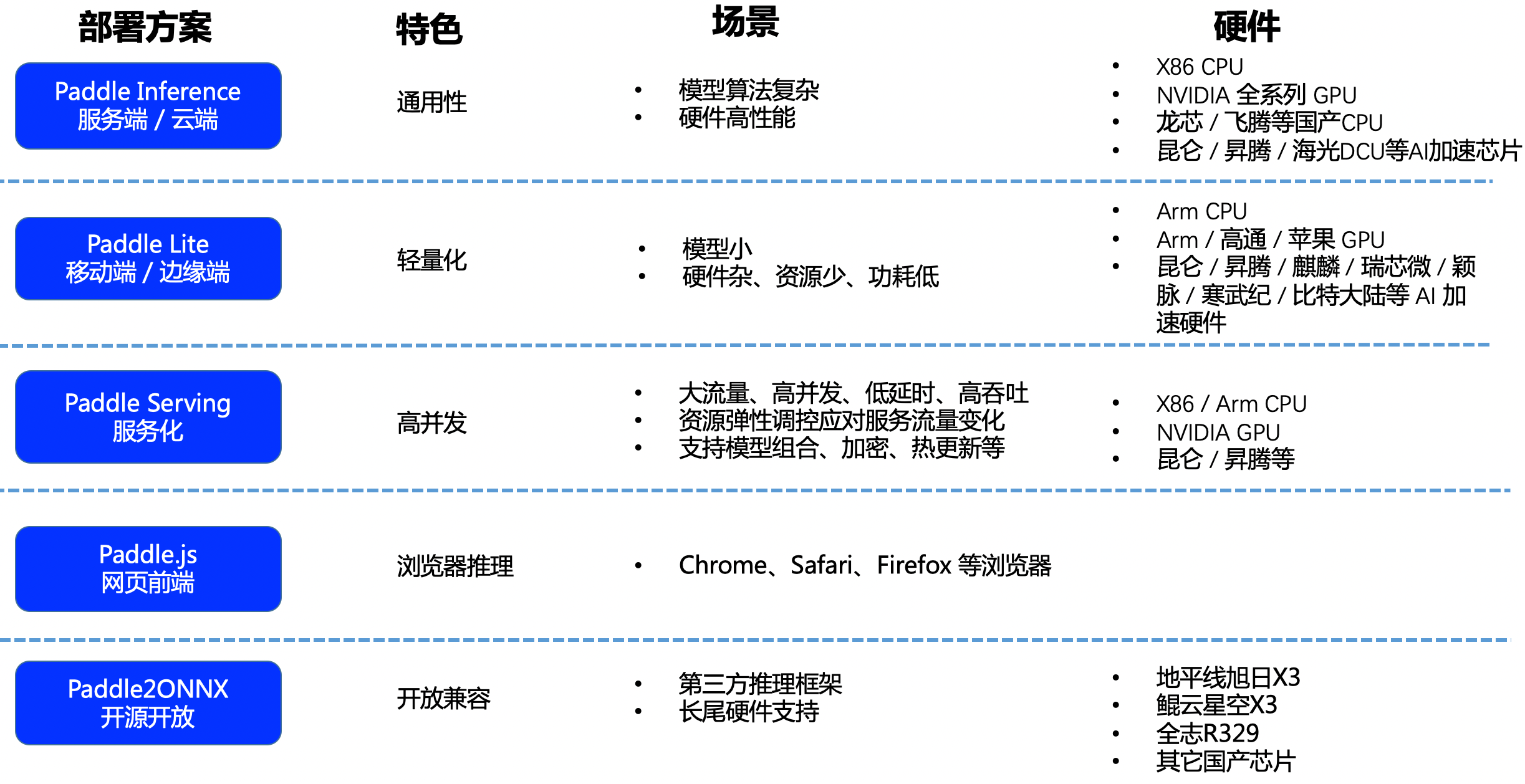

"The flying propeller supports the whole process and the whole scene reasoning and deployment. The source of the model is mainly divided into three kinds. The first one is to use PaddlePaddle API to build the network structure for training, the second is based on the flying propeller assembly series, and the flying propeller kit provides a rich model library, simple and easy to use API, and has the open box, namely the visual model library PaddleCV, Intelligent speech library paddlespeech and natural language processing library paddlenlp, etc. the third model is produced from the third-party framework (pytorh, onnx, tensorflow, etc.) using x2paddle tool.\n",

|

||

"\n",

|

||

"The paddle model can be compressed, quantified and distilled by using the paddleslim tool. It supports five deployment schemes: service-oriented paddle serving, server / cloud paddle inference, mobile / edge paddle lite and web front-end paddle JS, for hardware not supported by paddle, such as MCU, horizon, Kunyun and other domestic chips, paddle2onnx can be transformed into a third-party framework supporting onnx.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 25 deployment mode of propeller support</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"Paddle inference supports server-side and cloud deployment, with high performance and versatility. It has been deeply adapted and optimized for different platforms and different application scenarios. Paddle inference is the original reasoning base of the propeller, which ensures that the model can be trained and used on the server side and deployed quickly. It is suitable for deploying complex models with multiple application language environments on high-performance hardware, The hardware covers x86 CPU, NVIDIA GPU, baidu Kunlun XPU, Huawei shengteng and other AI accelerators.\n",

|

||

"Paddle Lite is an end-side reasoning engine with lightweight and high-performance characteristics. It has been deeply configured and optimized for end-side equipment and various application scenarios. At present, it supports Android, IOS, embedded Linux devices, MacOS and other platforms. The hardware covers ARM CPU and GPU, x86 CPU and new hardware, such as Baidu Kunlun, Huawei shengteng and Kirin, Ruixin micro, etc.\n",

|

||

"Paddy serving is a high-performance service framework designed to help users quickly deploy the model in the cloud in several steps. At present, paddle serving supports customized pre-processing and post-processing, model combination, model hot loading and updating, multi machine, multi card and multi model, distributed reasoning, k8s deployment, security gateway and model encryption deployment, and supports multi language and multi client access. Paddle serving official also provides deployment examples of more than 40 models, including paddleocr, to help users get started faster.\n",

|

||

"\n",

|

||

"\n",

|

||

"<center>Figure 26 deployment mode of propeller support</center>\n",

|

||

"\n",

|

||

"<br>\n",

|

||

"\n",

|

||

"The above deployment scheme will be explained and practiced in detail based on PP-OCRv2 model in Chapter 5."

|

||

]

|

||

},

|

||

{

|

||

"cell_type": "markdown",

|

||

"metadata": {},

|

||

"source": [

|

||

"# 4. Summary\n",

|

||

"This section first introduces the application scenarios and cutting-edge algorithms of OCR technology, and then analyzes the difficulties and three challenges of OCR technology in industrial practice.\n",

|

||

"\n",

|

||

"The contents of subsequent chapters of this tutorial are arranged as follows:\n",

|

||

"\n",

|

||

"* The second and third chapters respectively introduce the detection and recognition technology and practice;\n",

|

||

"* The fourth chapter introduces the PP-OCR optimization strategy;\n",

|

||

"* The fifth chapter carries out forecast deployment and actual combat;\n",

|

||

"* Chapter 6 introduces document structure;\n",

|

||

"* Chapter 7 introduces other OCR related algorithms such as end-to-end, data preprocessing and data synthesis;\n",

|

||

"* Chapter 8 introduces OCR related data sets and data synthesis tools.\n",

|

||

"\n",

|

||

"# Reference\n",

|

||

"\n",

|

||

"[1] Liao, Minghui, et al. \"Textboxes: A fast text detector with a single deep neural network.\" Thirty-first AAAI conference on artificial intelligence. 2017.\n",

|

||

"\n",

|

||

"[2] Liu W, Anguelov D, Erhan D, et al. Ssd: Single shot multibox detector[C]//European conference on computer vision. Springer, Cham, 2016: 21-37.\n",

|

||

"\n",

|

||

"[3] Tian, Zhi, et al. \"Detecting text in natural image with connectionist text proposal network.\" European conference on computer vision. Springer, Cham, 2016.\n",

|

||

"\n",

|

||

"[4] Ren S, He K, Girshick R, et al. Faster r-cnn: Towards real-time object detection with region proposal networks[J]. Advances in neural information processing systems, 2015, 28: 91-99.\n",

|

||

"\n",

|

||

"[5] Zhou, Xinyu, et al. \"East: an efficient and accurate scene text detector.\" Proceedings of the IEEE conference on Computer Vision and Pattern Recognition. 2017.\n",

|

||

"\n",

|

||

"[6] Wang, Wenhai, et al. \"Shape robust text detection with progressive scale expansion network.\" Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019.\n",

|

||

"\n",

|

||

"[7] Liao, Minghui, et al. \"Real-time scene text detection with differentiable binarization.\" Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 34. No. 07. 2020.\n",

|

||

"\n",

|

||

"[8] Deng, Dan, et al. \"Pixellink: Detecting scene text via instance segmentation.\" Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 32. No. 1. 2018.\n",

|

||

"\n",

|

||

"[9] He K, Gkioxari G, Dollár P, et al. Mask r-cnn[C]//Proceedings of the IEEE international conference on computer vision. 2017: 2961-2969.\n",

|

||

"\n",

|

||

"[10] Wang P, Zhang C, Qi F, et al. A single-shot arbitrarily-shaped text detector based on context attended multi-task \n",

|

||

"learning[C]//Proceedings of the 27th ACM international conference on multimedia. 2019: 1277-1285.\n",

|

||

"\n",

|

||

"[11] Shi, B., Bai, X., & Yao, C. (2016). An end-to-end trainable neural network for image-based sequence recognition and its application to scene Text Recognition. IEEE transactions on pattern analysis and machine intelligence, 39(11), 2298-2304.\n",

|

||

"\n",

|

||

"[12] Star-Net Max Jaderberg, Karen Simonyan, Andrew Zisserman, et al. Spa- tial transformer networks. In Advances in neural information processing systems, pages 2017–2025, 2015.\n",

|

||

"\n",

|

||

"[13] Shi, B., Wang, X., Lyu, P., Yao, C., & Bai, X. (2016). Robust scene Text Recognition with automatic rectification. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 4168-4176).\n",

|

||

"\n",

|

||

"[14] Sheng, F., Chen, Z., & Xu, B. (2019, September). NRTR: A no-recurrence sequence-to-sequence model for scene Text Recognition. In 2019 International Conference on Document Analysis and Recognition (ICDAR) (pp. 781-786). IEEE.\n",

|

||

"\n",

|

||

"[15] Lyu P, Liao M, Yao C, et al. Mask textspotter: An end-to-end trainable neural network for spotting text with arbitrary shapes[C]//Proceedings of the European Conference on Computer Vision (ECCV). 2018: 67-83.\n",

|

||

"\n",

|

||

"[16] Soto C, Yoo S. Visual detection with context for document layout analysis[C]//Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP). 2019: 3464-3470.\n",

|

||

"\n",

|

||

"[17] Sarkar M, Aggarwal M, Jain A, et al. Document Structure Extraction using Prior based High Resolution Hierarchical Semantic Segmentation[C]//European Conference on Computer Vision. Springer, Cham, 2020: 649-666.\n",

|

||

"\n",

|

||

"[18] Kieninger T, Dengel A. A paper-to-HTML table converting system[C]//Proceedings of document analysis systems (DAS). 1998, 98: 356-365.\n",

|

||

"\n",

|

||

"[19] Siddiqui S A, Fateh I A, Rizvi S T R, et al. Deeptabstr: Deep learning based table structure recognition[C]//2019 International Conference on Document Analysis and Recognition (ICDAR). IEEE, 2019: 1403-1409.\n",

|

||

"\n",

|

||

"[20] Raja S, Mondal A, Jawahar C V. Table structure recognition using top-down and bottom-up cues[C]//European Conference on Computer Vision. Springer, Cham, 2020: 70-86.\n",

|

||

"\n",

|

||

"[21] Xue W, Yu B, Wang W, et al. TGRNet: A Table Graph Reconstruction Network for Table Structure Recognition[J]. arXiv preprint arXiv:2106.10598, 2021.\n",

|

||

"\n",

|

||

"[22] Ye J, Qi X, He Y, et al. PingAn-VCGroup's Solution for ICDAR 2021 Competition on Scientific Literature Parsing Task B: Table Recognition to HTML[J]. arXiv preprint arXiv:2105.01848, 2021.\n",

|

||

"\n",

|

||

"[23] Du Y, Li C, Guo R, et al. PP-OCR: A practical ultra lightweight OCR system[J]. arXiv preprint arXiv:2009.09941, 2020.\n",

|

||

"\n",

|

||

"[24] Du Y, Li C, Guo R, et al. PP-OCRv2: Bag of Tricks for Ultra Lightweight OCR System[J]. arXiv preprint arXiv:2109.03144, 2021.\n",

|

||

"\n"

|

||

]

|

||

}

|

||

],

|

||

"metadata": {

|

||

"kernelspec": {

|

||

"display_name": "p2",

|

||

"language": "python",

|

||

"name": "p2"

|

||

},

|

||

"language_info": {

|

||

"codemirror_mode": {

|

||

"name": "ipython",

|

||

"version": 3

|

||

},

|

||

"file_extension": ".py",

|

||

"mimetype": "text/x-python",

|

||

"name": "python",

|

||

"nbconvert_exporter": "python",

|

||

"pygments_lexer": "ipython3",

|

||

"version": "3.7.11"

|

||

}

|

||

},

|

||

"nbformat": 4,

|

||

"nbformat_minor": 4

|

||

}

|